I'm Living the AI Disruption Everyone's Warning About

Matt Shumer warns AI disruption is coming. I'm writing to confirm: it's not coming — it's here. And I've been living it for over a year.

Last week, I read an article warning that AI is about to disrupt knowledge work. The irony? My AI assistant summarized it for me, extracted action items, and drafted this response — all while I made coffee.

Matt Shumer, an AI startup founder, wrote a piece called "Something Big Is Happening" that's been making the rounds. His core message: we're in the "this seems overblown" phase of something bigger than COVID. The disruption is coming.

I'm not writing to disagree with him. I'm writing because I can confirm: it's not coming. It's here. And I've been living it for over a year.

My own "water at chest level" moment

Shumer describes February 5th, 2026, as a turning point, when GPT-5.3 Codex and Opus 4.6 arrived and "something clicked." He compares it to realizing the water has been rising around you.

I know that feeling. But mine started earlier.

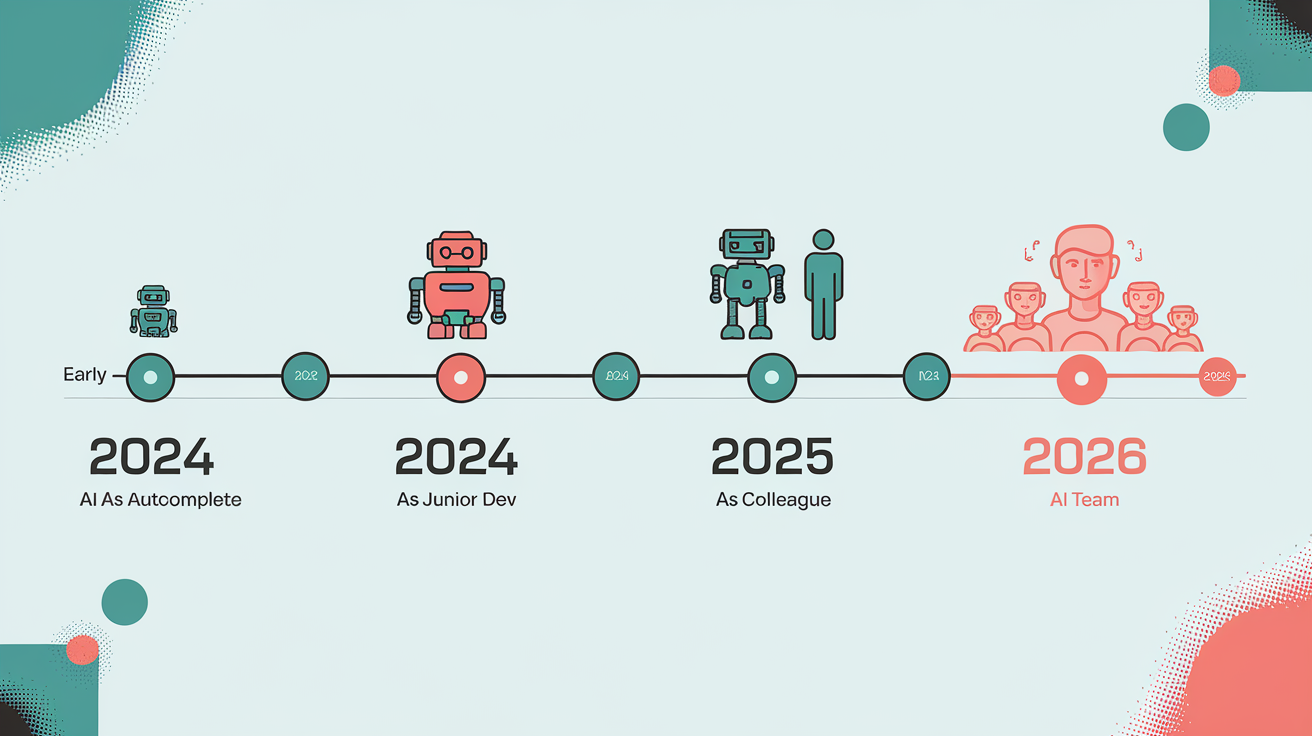

| Period | How I Worked |
|---|---|
| Early 2024 | AI as autocomplete. I typed, it suggested. Every line reviewed. |
| Late 2024 | AI as junior dev. Back-and-forth conversations. Heavy supervision. |
| Mid 2025 | AI as colleague. Delegating features. Reviewing PRs. |
| Now | AI as team. I describe outcomes. They build. I review and ship. |

A year ago, I was going back and forth with AI constantly. Reviewing every line. Fixing obvious mistakes. Treating it like a junior developer who needed supervision.

Six months ago, I started delegating entire features. Write the tests. Implement the migration. Build the component. I'd review the PR like I would from a human colleague.

Now? I describe what I want and walk away. Sometimes for hours. When I come back, it's done. Not a rough draft — the actual thing, tested, working, often better than I would have built myself.

Shumer writes: "I am no longer needed for the actual technical work of my job."

I felt that sentence in my chest.

The orchestrator shift

What changed isn't the AI. It's me.

I stopped thinking of myself as a developer who uses AI tools. I became an orchestrator who directs AI agents.

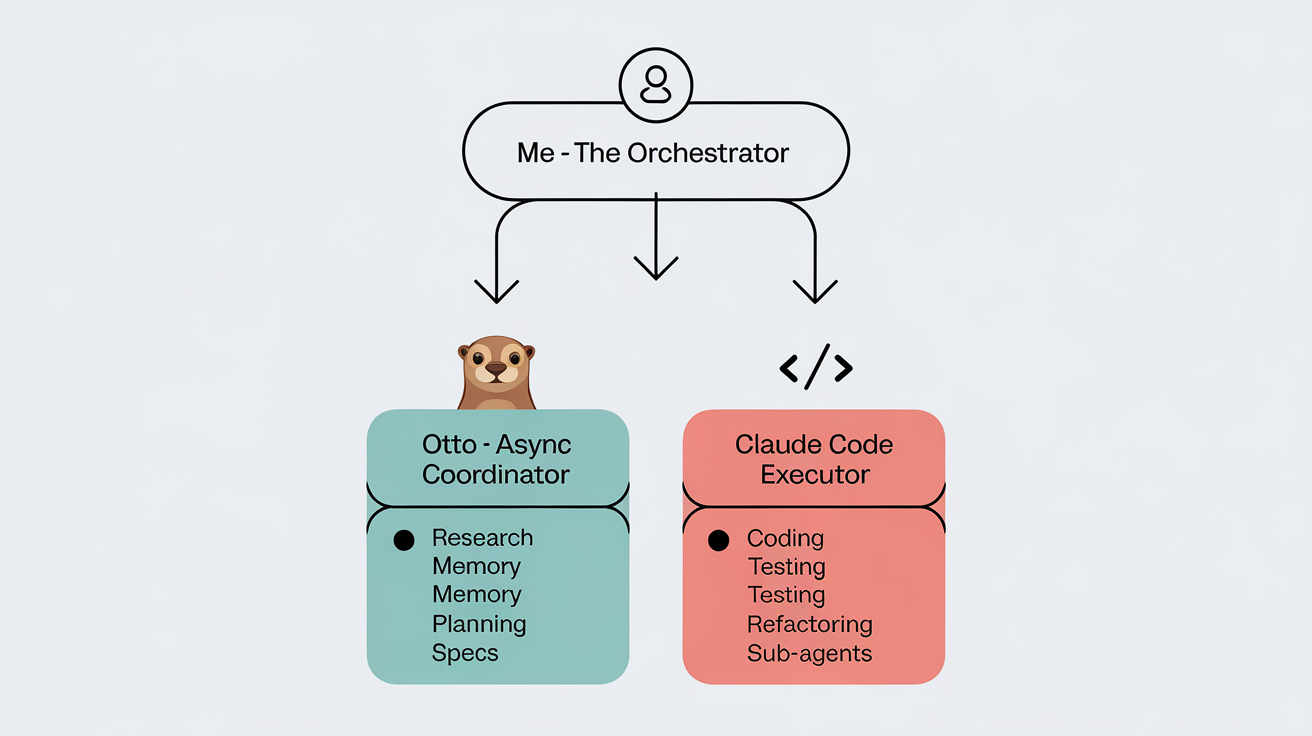

My setup is simple: Otto (my async AI coordinator) handles research, planning, and memory. Claude Code (in my IDE) handles execution. I describe outcomes. They build.
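To make the division of labor concrete, here is a minimal Python sketch of a coordinator/executor split. It is not how Otto or Claude Code work internally: `Coordinator`, `Note`, `brief_for`, and the `executor` callable are hypothetical names, and "memory" is just an in-process list standing in for whatever persistent store a real setup would use.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Note:
    """One remembered fact, tagged with the project it belongs to (hypothetical schema)."""
    project: str
    text: str


@dataclass
class Coordinator:
    """Hypothetical coordinator: owns planning and memory, delegates execution."""
    executor: Callable[[str], str]  # stand-in for the execution agent (e.g. an IDE agent)
    memory: List[Note] = field(default_factory=list)

    def remember(self, project: str, text: str) -> None:
        self.memory.append(Note(project, text))

    def brief_for(self, project: str, outcome: str) -> str:
        # Turn a described outcome into a task brief, attaching this project's memory as context.
        context = [n.text for n in self.memory if n.project == project]
        lines = [f"Outcome: {outcome}"] + [f"Context: {c}" for c in context]
        return "\n".join(lines)

    def delegate(self, project: str, outcome: str) -> str:
        brief = self.brief_for(project, outcome)
        result = self.executor(brief)
        # Record the finished outcome so the next brief for this project starts with it.
        self.remember(project, f"Done: {outcome}")
        return result


if __name__ == "__main__":
    # The executor here just echoes its brief; a real one would go off and build things.
    coordinator = Coordinator(executor=lambda brief: brief)
    coordinator.remember("blog", "Site uses static generation")
    print(coordinator.delegate("blog", "Add an RSS feed"))
    print(coordinator.delegate("blog", "Add a sitemap"))
```

The point of the design is that the executor never needs to remember anything: every brief it receives already carries the context the coordinator has accumulated.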

But it goes deeper. Claude Code now supports Agent Teams — multiple AI agents working in parallel under a single team lead. One agent writes the feature, another writes tests, a third reviews the code. They coordinate, they hand off work, they resolve conflicts. I'm not directing individual agents anymore. I'm directing a team of agents.
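The team pattern itself can be sketched in a few lines of Python. This does not use Claude Code's Agent Teams interfaces; `team_lead` and the three agent callables are hypothetical stand-ins, shown only to illustrate the shape: two agents work in parallel, then a reviewer either approves or hands feedback back to the implementer.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Optional

# Hypothetical agent signatures: each "agent" is just a function in this sketch.
Implementer = Callable[[str, Optional[str]], str]  # (spec, reviewer feedback) -> code
TestWriter = Callable[[str], str]                  # spec -> tests
Reviewer = Callable[[str, str], Optional[str]]     # (code, tests) -> None if approved, else feedback


def team_lead(spec: str, implement: Implementer, write_tests: TestWriter,
              review: Reviewer, max_rounds: int = 3) -> Dict[str, object]:
    """Run the implementer and test writer in parallel, then loop on review feedback."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        code_future = pool.submit(implement, spec, None)
        tests_future = pool.submit(write_tests, spec)
        code, tests = code_future.result(), tests_future.result()

    for round_no in range(1, max_rounds + 1):
        feedback = review(code, tests)
        if feedback is None:
            return {"approved": True, "rounds": round_no, "code": code, "tests": tests}
        if round_no < max_rounds:
            # Hand the reviewer's feedback back to the implementer for another pass.
            code = implement(spec, feedback)
    return {"approved": False, "rounds": max_rounds, "code": code, "tests": tests}
```

Capping the loop with `max_rounds` is a deliberate choice: if the agents can't converge, the work comes back to a human instead of cycling forever.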

A year ago, this would have sounded like science fiction. Now it's just... Thursday.

The psychological shift is harder than the technical one. After twenty years of writing code, my identity was wrapped up in being a developer. Now I spend more time describing and reviewing than typing. Some days that feels liberating. Some days it feels like I'm being automated out of my own craft.

Both can be true.

Where Shumer gets it right

The exponential curve is real. Every few months, I update my workflow because the new models obsolete my old assumptions. Tasks I thought needed human judgment now happen automatically. The things I said AI "could never do" keep getting crossed off the list.

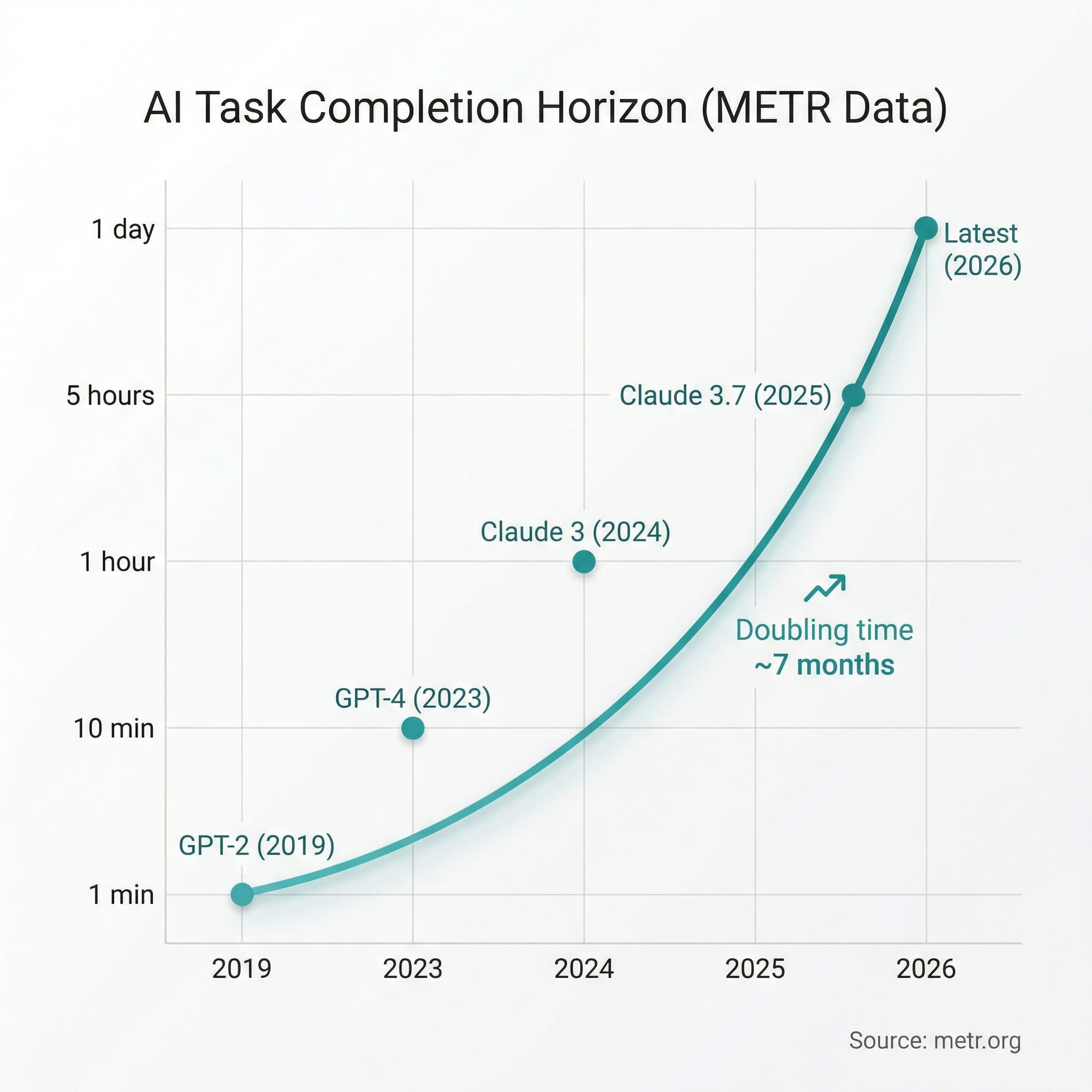

METR, an AI safety research organization, has been tracking this with hard data. They measure "time horizon": the length of task (measured by how long it takes a human expert) that an AI agent can complete autonomously with 50% reliability.
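A 50% time horizon comes from fitting a curve of success probability against (log) task length and reading off where it crosses 50%. The sketch below is a toy version of that idea using a logistic fit solved with Newton's method; it is not METR's code, and the task results in the example are made up.

```python
import math
from typing import List, Tuple


def fit_time_horizon(results: List[Tuple[float, int]], iters: int = 25) -> float:
    """Fit P(success) = sigmoid(a * log2(minutes) + b) by Newton's method and
    return the task length (in minutes) where predicted success is 50%.

    results: (human_minutes, succeeded) pairs with succeeded in {0, 1}.
    Assumes the outcomes are not perfectly separable by length (else the fit diverges).
    """
    xs = [math.log2(m) for m, _ in results]
    ys = [y for _, y in results]
    a, b = 0.0, 0.0
    for _ in range(iters):
        g_a = g_b = h_aa = h_ab = h_bb = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(a * x + b)))
            w = p * (1.0 - p)
            g_a += (y - p) * x  # gradient of the log-likelihood
            g_b += y - p
            h_aa += w * x * x   # entries of the negated Hessian
            h_ab += w * x
            h_bb += w
        det = h_aa * h_bb - h_ab * h_ab
        a += (h_bb * g_a - h_ab * g_b) / det
        b += (h_aa * g_b - h_ab * g_a) / det
    # sigmoid(a*x + b) is exactly 0.5 where a*x + b == 0, i.e. x = -b / a.
    return 2.0 ** (-b / a)


if __name__ == "__main__":
    # Hypothetical runs: (task length in human-minutes, 1 = agent succeeded).
    runs = [(1, 1), (2, 1), (4, 1), (4, 1), (4, 0), (8, 1), (8, 0),
            (16, 0), (16, 0), (16, 1), (32, 0), (64, 0)]
    print(f"50% time horizon: {fit_time_horizon(runs):.1f} minutes")
```

Working in log2 of task length is what makes "doubling time" a natural unit: each doubling of the horizon shifts the 50% crossing point by exactly one on that axis.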

The numbers are staggering:

| Date | Model | Time horizon (50% reliability) |
|---|---|---|
| 2019 | GPT-2 | A few seconds |
| 2023 | GPT-4 | ~10 minutes |
| Feb 2025 | Claude 3.7 Sonnet | ~1 hour |
| Nov 2025 | Claude Opus 4.5 | ~5 hours |
| Feb 2026 | Latest models | Days (estimated) |

Doubling time: approximately 7 months. If this continues, AI that can autonomously complete tasks that take a human expert weeks arrives within 2-3 years.
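The arithmetic behind that projection is easy to check. A minimal sketch, assuming clean exponential growth with a fixed 7-month doubling time, a starting horizon of ~5 hours, and a 40-hour work week (the last is an assumption for illustration):

```python
import math


def months_to_reach(start_hours: float, target_hours: float,
                    doubling_months: float = 7.0) -> float:
    """Months until the time horizon grows from start_hours to target_hours,
    assuming clean exponential growth with a fixed doubling time."""
    return doubling_months * math.log2(target_hours / start_hours)


def horizon_after(start_hours: float, months: float,
                  doubling_months: float = 7.0) -> float:
    """Projected time horizon (in hours) after `months` on the same trend."""
    return start_hours * 2 ** (months / doubling_months)


if __name__ == "__main__":
    # Starting point: a ~5-hour horizon. Assumption: one "week" = 40 working hours.
    print(months_to_reach(5, 40))   # one work week   -> 21.0
    print(months_to_reach(5, 160))  # four work weeks -> 35.0
```

On a straight trend line, week-long tasks land about 21 months out and month-long tasks about 35 months out, which lines up with the 2-3 year range. Real progress is noisier than a trend line, so treat these as the arithmetic of the curve, not a forecast.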

The gap between public perception and reality is dangerous. Friends ask me about AI and I give them the polite version. The cocktail-party version. Because the honest version — that I've automated 70% of my coding work in 18 months — sounds unbelievable. But it's true.

"Judgment" and "taste" are emerging. The latest models don't just execute instructions. They make choices. Good choices. Choices that reflect an understanding of what "right" looks like, not just what "correct" looks like. I didn't expect this so soon.

What he doesn't address

Shumer writes for people outside tech, warning them about what's coming. But he glosses over some things that matter to those of us already inside it:

The practical transition. How do you actually go from "doing the work" to "directing the work"? It's not obvious. It requires rethinking your tools, your workflow, your definition of productivity. I spent months figuring this out.

The quality paradox. AI-generated code is sometimes better than my code. Cleaner. More consistent. Better tested. That's a weird thing to admit. I built my career on being good at this. Now "good" isn't enough — the baseline moved.

The identity crisis. If AI writes the code, what am I? Am I still a developer? Does that even matter? These aren't abstract questions when you're staring at a PR that's better than what you would have written.

What I'd tell my past self

If I could go back 18 months and give myself advice, it wouldn't be "learn AI." It would be this:

Stop optimizing for tasks. Start optimizing for outcomes.

I wasted months getting better at prompting. Writing detailed instructions. Treating AI like a vending machine where better input equals better output. That's the wrong mental model.

The shift happened when I stopped asking "how do I get AI to write this function?" and started asking "what am I actually trying to build, and what's the fastest path there?" Sometimes that means AI writes everything. Sometimes it means I write the tricky parts and AI handles the boilerplate. Sometimes it means AI plans while I execute.

Build systems, not habits.

One-off AI usage doesn't compound. Having a setup where AI is always available, always has context, always remembers what you're working on — that compounds. My orchestrator setup took weeks to build. It saves hours every day.

Get comfortable with discomfort.

The weird feeling when AI writes better code than you? That doesn't go away. You just learn to use it instead of fighting it. The identity questions don't resolve — you learn to hold them while still shipping.

Talk to other people going through this.

The most valuable conversations I've had weren't tutorials or courses. They were honest chats with other developers experiencing the same shift. "Is this normal? Are you feeling this too?" Turns out, yes. Everyone's figuring this out in real time.

The question I can't answer

Shumer ends with optimism about opportunity: if you've wanted to build something but lacked skills, that barrier is gone. He's right. I've launched products in weeks that would have taken months before.

But the question I keep coming back to is this: what happens when everyone has these capabilities?

If anyone can build an app in an hour, apps become commoditized. If AI can write, analyze, and decide, what's the durable value of humans in the loop?

I don't have the answer. I'm not sure anyone does.

What I do know is that the worst strategy is to pretend this isn't happening. The water is rising. You can learn to swim, or you can wait until it's at your chest.

I'm choosing to swim.

Let's talk

I'm genuinely curious what others are experiencing:

- What parts of your work have you already automated?

- What's holding you back from going further?

- What would you want AI to handle that it can't yet?

Drop a comment or find me on X. I'm not interested in AI hype or doom. I'm interested in how real people are actually adapting.

Because that's the story worth telling.

This is part of my ongoing series about building in the age of AI.
